Forecasting how LLMs will be used is difficult given how quickly they are being developed (multi-modality alone will drastically expand their use cases). What LLMs have clearly demonstrated is an ability to translate natural language into data structures and algorithms. While this capability is currently used mostly by programmers, a multitude of start-ups and companies are building natural language interfaces to software. The importance of this should not be overlooked: with just a little VBA knowledge, entire companies have been run out of Excel.

My interest is in hardware and the physical world. Software seems to offer an infinite expanse: your OS will happily hand you 64-bit pointers, as if 2^64 bytes of memory were available. Many of the more ridiculous takes on ML stem from assuming this infinite, abstract space. Hardware is different: its physical form defines its possible interconnections and behavior. In other words, it defines a domain.

For that reason, I expect LLMs to interface with hardware systems through domain-specific languages (DSLs). General-purpose languages like Python have a gigantic surface area, while DSLs are tightly constrained. We should be able to pass the entire DSL specification to a model as part of the prompt, greatly limiting the risk of hallucinated commands.
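Because a DSL is small, both halves of this idea fit in a few lines: the whole specification is rendered into the prompt, and whatever the model returns is checked against that same specification before anything reaches the hardware. The sketch below is hypothetical: `SPEC`, `spec_to_prompt`, and `validate` are illustrative names, the verbs mirror the robotic arm DSL in the chat that follows, and every argument is assumed to be a single whitespace-separated token.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical spec mirroring the robotic arm DSL used in the chat below.
# Each verb maps to (argument name, parser) pairs; a parser raises
# ValueError when its argument falls outside the domain.
Parser = Callable[[str], object]


def motor_index(token: str) -> int:
    value = int(token)
    if not 1 <= value <= 3:
        raise ValueError(f"motor_index out of range: {value}")
    return value


def motor_angle(token: str) -> float:
    value = float(token)
    if not 0.0 <= value <= 360.0:  # also rejects nan and inf
        raise ValueError(f"motor_angle out of range: {value}")
    return value


def word(token: str) -> str:
    # Items and places are left for the world model to interpret.
    return token


SPEC: Dict[str, List[Tuple[str, Parser]]] = {
    "SET_ANGLE": [("motor_index", motor_index), ("motor_angle", motor_angle)],
    "PICK_UP": [("item", word)],
    "RELEASE": [],
    "MOVE_TO": [("place", word)],
    "NO_OP": [],
}


def spec_to_prompt(spec: Dict[str, List[Tuple[str, Parser]]]) -> str:
    """Render every verb signature so the entire DSL fits in the prompt."""
    lines = ["Translate natural language to a robotic arm DSL. Verbs:"]
    for verb, args in spec.items():
        lines.append(" ".join([verb] + [name for name, _ in args]))
    lines.append("If a request matches none of these verbs, output NO_OP.")
    return "\n".join(lines)


def validate(line: str) -> tuple:
    """Parse one line of model output; anything outside the DSL becomes NO_OP."""
    tokens = line.split()
    if not tokens or tokens[0] not in SPEC:
        return ("NO_OP",)
    verb, raw_args = tokens[0], tokens[1:]
    params = SPEC[verb]
    if len(raw_args) != len(params):
        return ("NO_OP",)
    try:
        return (verb,) + tuple(parse(tok) for (_, parse), tok in zip(params, raw_args))
    except ValueError:
        return ("NO_OP",)
```

For example, `validate("SET_ANGLE 2 180.0")` yields `("SET_ANGLE", 2, 180.0)`, while `validate("SET_ANGLE 4 90")` falls back to `("NO_OP",)` because there is no fourth motor. Falling back to NO_OP mirrors the rule given to the model in the prompt; a real system might instead ask the model to try again.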

The following chat with GPT-4 shows that this works:

You will be translating natural language to a robotic arm domain specific language. A description of the DSL verbs follows:

SET_ANGLE motor_index motor_angle

This command sets a motor to a particular angle. motor_index is an integer from one to three. motor_angle is a float from 0 to 360 degrees.

PICK_UP item

This command instructs our policy planner to pick up a particular item. item is a string that the world model will recognize as an object.

RELEASE

This command opens the end effector.

MOVE_TO place

This command instructs our policy planner to move to a particular place. place is a string that the world model will recognize as a location.

NO_OP

This command does nothing. If a natural language query does not match any of the above, output NO_OP.

The user will give you commands in natural language. When you get a command, briefly explain which of the DSL verbs matches best. Then, output that command and its arguments in a code block.

USER:

Set the angle of motor 2 to a half turn.

The given command matches the DSL verb “SET_ANGLE” where the motor needs to be set to a particular angle.

A half turn means 180 degrees.

So, translating the command:

```
SET_ANGLE 2 180.0
```

USER:

Get me that apple.

The given command matches the DSL verb “PICK_UP” where an item needs to be picked up.

Translating the command:

```
PICK_UP apple
```

USER:

Fly me to the moon, let me play among the stars.

The command seems to be inspired by the song “Fly Me to the Moon” and doesn’t directly match any of the robotic arm DSL verbs described.

Thus, the appropriate translation would be:

```
NO_OP
```

USER:

Move to the trash. Release the apple.

The user’s command contains two distinct instructions. The first matches the DSL verb “MOVE_TO” and the second matches the DSL verb “RELEASE”.

Translating the commands:

MOVE_TO trash

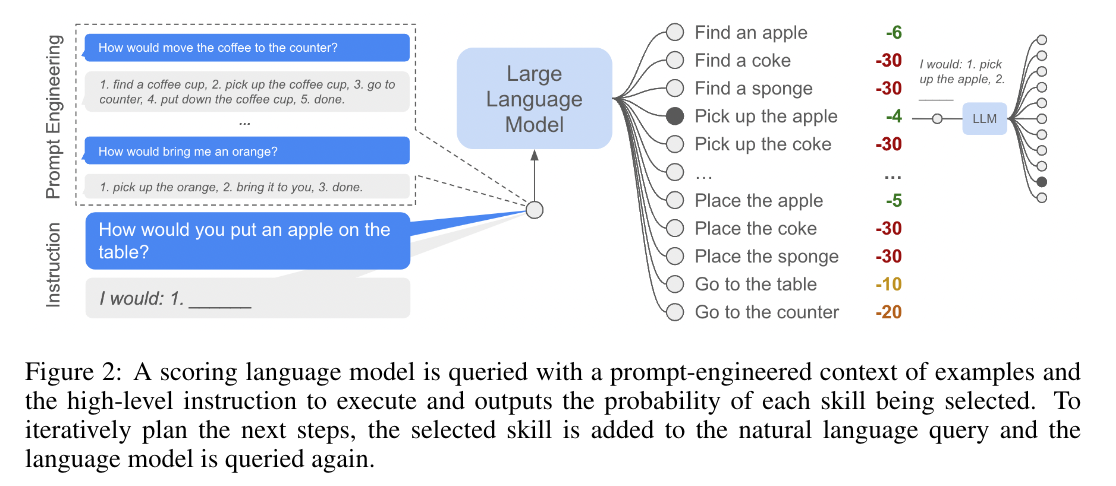

RELEASE While this example leaves a lot out in terms of implementation (how should the world model and policy planner translate natural language?), clearly this approach is feasible. A similar approach appears in Google’s SayCan paper, with the advantage of scoring the available affordances:
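Roughly, SayCan scores each candidate skill twice: the language model estimates how useful the skill is for the instruction, and a learned value function estimates how likely the skill is to succeed from the robot's current state. The skill with the highest product is executed. The sketch below shows only that single selection step; `saycan_select`, the skill strings, and all scores are hypothetical stand-ins, and the real system repeats the step, appending each chosen skill to the prompt until the task is done.

```python
from typing import Callable, Sequence


def saycan_select(
    instruction: str,
    skills: Sequence[str],
    llm_score: Callable[[str, str], float],
    affordance_score: Callable[[str], float],
) -> str:
    """Return the skill maximizing usefulness * feasibility.

    llm_score(instruction, skill): how useful the language model thinks the
        skill is for the instruction (SayCan uses the LLM's likelihood).
    affordance_score(skill): how likely the skill is to succeed right now
        (SayCan uses a learned value function); assumed here to already
        close over the robot's current observation.
    """
    return max(skills, key=lambda skill: llm_score(instruction, skill) * affordance_score(skill))


# Made-up numbers, for illustration only.
SKILLS = ["PICK_UP apple", "MOVE_TO kitchen", "RELEASE"]
LLM = {"PICK_UP apple": 0.7, "MOVE_TO kitchen": 0.2, "RELEASE": 0.1}
APPLE_OUT_OF_REACH = {"PICK_UP apple": 0.05, "MOVE_TO kitchen": 0.9, "RELEASE": 0.5}
APPLE_IN_REACH = {"PICK_UP apple": 0.95, "MOVE_TO kitchen": 0.9, "RELEASE": 0.5}


def llm_score(instruction: str, skill: str) -> float:
    # Stub: a real system would ask the model to score the skill text.
    return LLM[skill]


choice_far = saycan_select("Get me that apple.", SKILLS, llm_score, APPLE_OUT_OF_REACH.__getitem__)
choice_near = saycan_select("Get me that apple.", SKILLS, llm_score, APPLE_IN_REACH.__getitem__)
```

With the apple out of reach, moving first wins (0.2 × 0.9 beats 0.7 × 0.05); once it is reachable, picking it up wins. This is the advantage over the plain translation in the chat above: the model is less likely to choose an action the robot cannot currently carry out.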